At the beginning of the year we posited that those of us who work with complex data need to move away from rows and columns and toward graphs when thinking about and working with our data. The first step is creating your model. This step is critical and, unfortunately, in my opinion, the least fun, mostly because it is hard. We'd like to jump straight to creating cool network diagrams and querying the data to find unique and innovative match-ups, but starting with the model is often the least intuitive and most overlooked step. So, while I want to jump into creating mind-blowing data visualizations, we are going to spend a bit more time with models and data entry. We want to ensure that you get the value of being able to generalize the problem you are mapping out.

Blog

My new year's resolution was to do more blogging, and in January I wrote a blog about 12 things related to data, people, projects, and innovation that I believe we need to approach differently. The very first item in my 12-item list included the statement: "We need to shift away from thinking of our data as individual tables and towards realizing it's one big network. We need to mine it not by building lists but by making maps."

In this blog I'd like to delve more deeply into what I meant by that and invite you to try the first step of building your own map. If you decide to enter the "mental model challenge" you can win some cool Exaptive swag and tickets to the ISPIM innovation conference in Copenhagen in June that you can join either physically or virtually! Even if you don't win, I think you'll find the exercise illuminating, so I hope you'll try it.

It's January of a new year! It's the time for new year's resolutions and fresh resolve to achieve those goals that remained elusive at the end of last year. Achieving a challenging objective often requires taking a new perspective. Since my new year's resolution is to blog more, I wanted to start out the year with a blog about 12 key areas where we've seen first-hand, working with organizations around the globe, the impact that taking a new perspective can have.

A well-known saying, probably misattributed to Einstein, says that "insanity is doing the same thing over and over again but expecting different results." If there's an objective you had trouble achieving last year, maybe this year you need to come at it from a new perspective.

Mapping the assets of your team: Superheroes as a case-study

I have wanted to build a network of comic book heroes, villains, and their attributes for a while now. Viewing powers and weaknesses across publishers and seeing how common certain attributes are (so far, wealth isn’t nearly as common as flight or accelerated healing) was the ideal way for me to learn how to create a complex network using the Exaptive Studio platform with a diverse team.

Share Your Work to Innovate

I presented at the ISPIM Conference in 2020 (ISPIM: International Society of Professional Innovation Management) on how co-production can bridge the gap between academic research and industry use cases, creating shared, collective intelligence. Co-production in this context means people with different skills and areas of expertise working on a common goal. By sitting at the intersection of these divergent perspectives, co-production marries aspects of each viewpoint, bringing ideas born in research to life in industry.

The Best Innovation Management Software is Data-Driven, not Just Idea-Driven

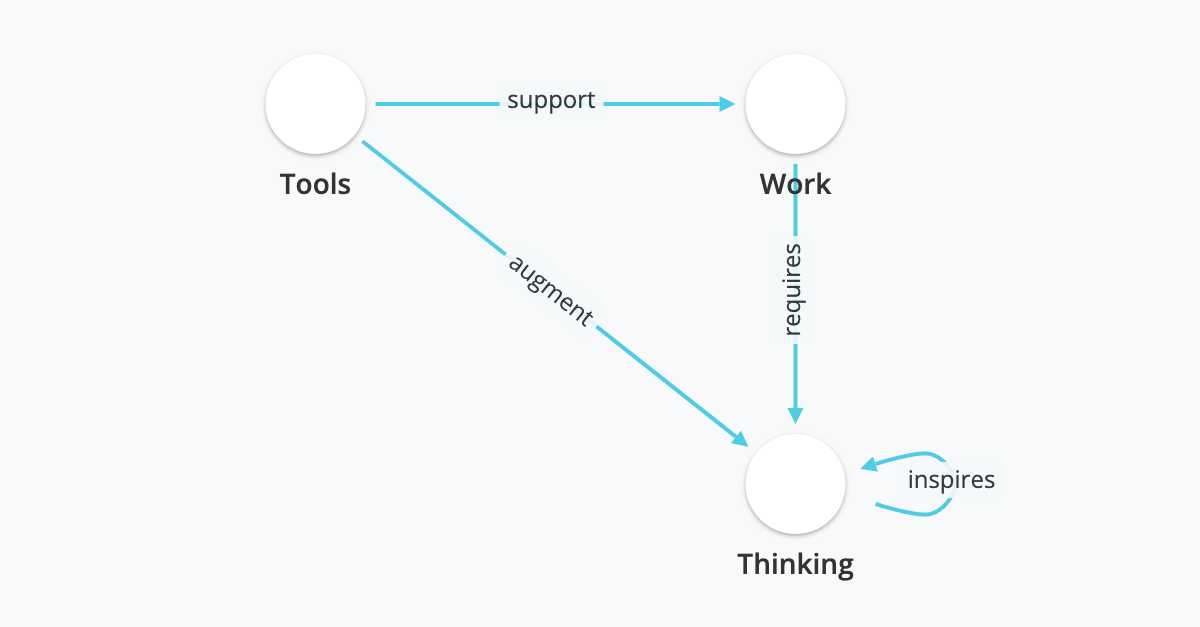

Innovation relies on a new perspective. We’ve found there are two ways to get that: collaboration and data. Either one can free our attention from the daily productivity push and spur an innovation.

Use Data, Technology, and Intention to Optimize Team Building

Cleaning data is often the primary job of the data scientist, and not necessarily the one they signed up for. While getting the "words" right with computers is important, it is not fraught with the nuance that getting words right with humans is. Across disciplines, the same word can have entirely different meanings, and this miscommunication is only one aspect of team building that can go awry.

Innovation requires collaboration, but due to the current state of our filter bubbles, collaboration is stuck in a rut. Data science utilizing knowledge graphs and team and portfolio optimization software can help us climb out. It can increase the scale, the intentionality, and the nuance of how we collaborate. With the right data and algorithms, we can use software to optimize our teams and facilitate innovation.

I recently moved from Boston to Oklahoma City. My wife got offered a tenure-track position at the University of Oklahoma, which was too good an opportunity for her career for us to pass up. Prior to the move, I had done a lot of traveling in the US, but almost exclusively on the coasts, so I didn't know what living in the southern Midwest would bring, and I was a bit trepidatious. It has turned out to be a fantastic move. There is a thriving high-tech startup culture here. I've been able to hire some great talent out of the University, and we're now planning to build up a big Exaptive home office here. Even more important, I was delighted to find a state that was extremely focused on fostering creativity and innovation. In fact, the World Creativity Forum is being hosted here this week, and I was asked to give a talk about innovation. As I thought about what I wanted to say, I found myself thinking about . . . cowboys.

Making Service-Oriented Architecture Serve Data Applications

Bloor Group CEO Eric Kavanagh recently chatted with David King, CEO and founder of Exaptive. Their discussion looked at the ways in which service-oriented architecture (SOA) has and has not fulfilled its promise, especially as it applies to working with data. Take a listen or read the transcript.
